Ever since the advent of generative AI, the age-old battle of man versus machine has been looking decidedly one-sided. However, one photographer, intent on making the case for pictures captured with the human eye, has taken the fight to his algorithm-powered rivals – and won.

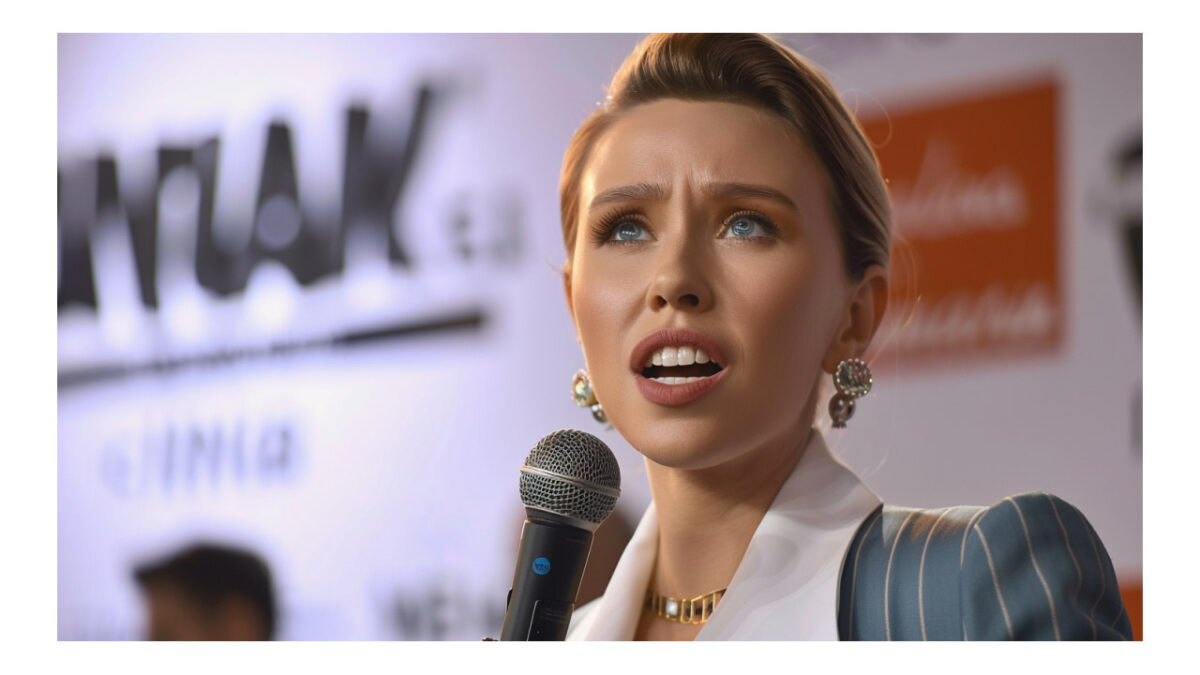

Miles Astray, a 38-year-old photographer, decided to challenge the surge of AI-generated images sweeping through conventional photography contests. In a bold move, he submitted a photograph he had taken himself, titled “Flamingone,” to the AI category of the prestigious 1839 Awards. The striking image, which shows an orb of pink feathers standing on knobbly legs, convinced the judging panel to award it third place in that category. It also won the people’s choice award.

Astray was motivated to break the rules after watching a series of AI-generated images win traditional photography awards. “It occurred to me that I could twist this story inside down and upside out the way only a human could and would, by submitting a real photo into an AI competition,” he explained. He deliberately chose a surreal, almost unbelievable image that could easily be mistaken for an AI creation.

However, once it was revealed that no AI was involved in the making of “Flamingone,” Astray was stripped of his award, which included a cash prize. The bronze medal and people’s choice award were then given to two other creators. “AI can already produce incredibly real-looking content, and if that content meets an unquestioning eye, you can easily deceive entire audiences,” Astray said.

Astray’s act of subversion highlights the growing need for skepticism and vigilance in the face of increasingly realistic AI-generated content. “Up until now, we never had much of a reason to question the authenticity of photos, videos, and audios. This has changed overnight, and we’ll need to adapt to this. It has never been more important to be questioning. That’s an individual responsibility that will be even more crucial than tagging and flagging AI content,” he said.

In a statement, the competition’s organizers acknowledged Astray’s powerful message but maintained that his submission was unfair. “Each category has distinct criteria that entrants’ images must meet. His submission did not meet the requirements for the AI-generated image category. We understand that was the point, but we don’t want to prevent other artists from their shot at winning in the AI category. We hope this will bring awareness (and a message of hope) to other photographers worried about AI.”

Astray’s stunt mirrors, in reverse, the actions of German artist Boris Eldagsen, who made headlines the previous year by winning a Sony World Photography Award with an AI-generated image. Eldagsen defended his entry in the “creative open” category, arguing that the creation process was complex and involved much more than simply typing in a few words and clicking ‘generate.’

For Astray, the confusion his image caused is precisely the point. “If the amount of seemingly real fakes in circulation keeps increasing, it’ll be hard to keep up with what’s real and what’s not,” he said. “I couldn’t live the life I’m living without technology, so I don’t demonize it, but I think it’s often a double-edged sword with the potential to do both good and harm.”

As the lines between human and AI-generated content continue to blur, Astray’s provocative move serves as a reminder of the unique creativity and unpredictability that only a human can bring to the art of photography.